Sensing Violet: The Human Eye and Digital Cameras

Both the human eye and digital cameras use sensors that are sensitive to blue, green, and red light, and yet both we and the cameras correctly sense light of other colors. What is happening, of course, is that the human and camera systems combine the outputs of more than one sensor type to determine the wavelength of the light. What is surprising, however, is that humans distinguish colors in and beyond the blue region not with the "blue" and adjacent "green" sensors, but with the "blue" and "red" sensors.

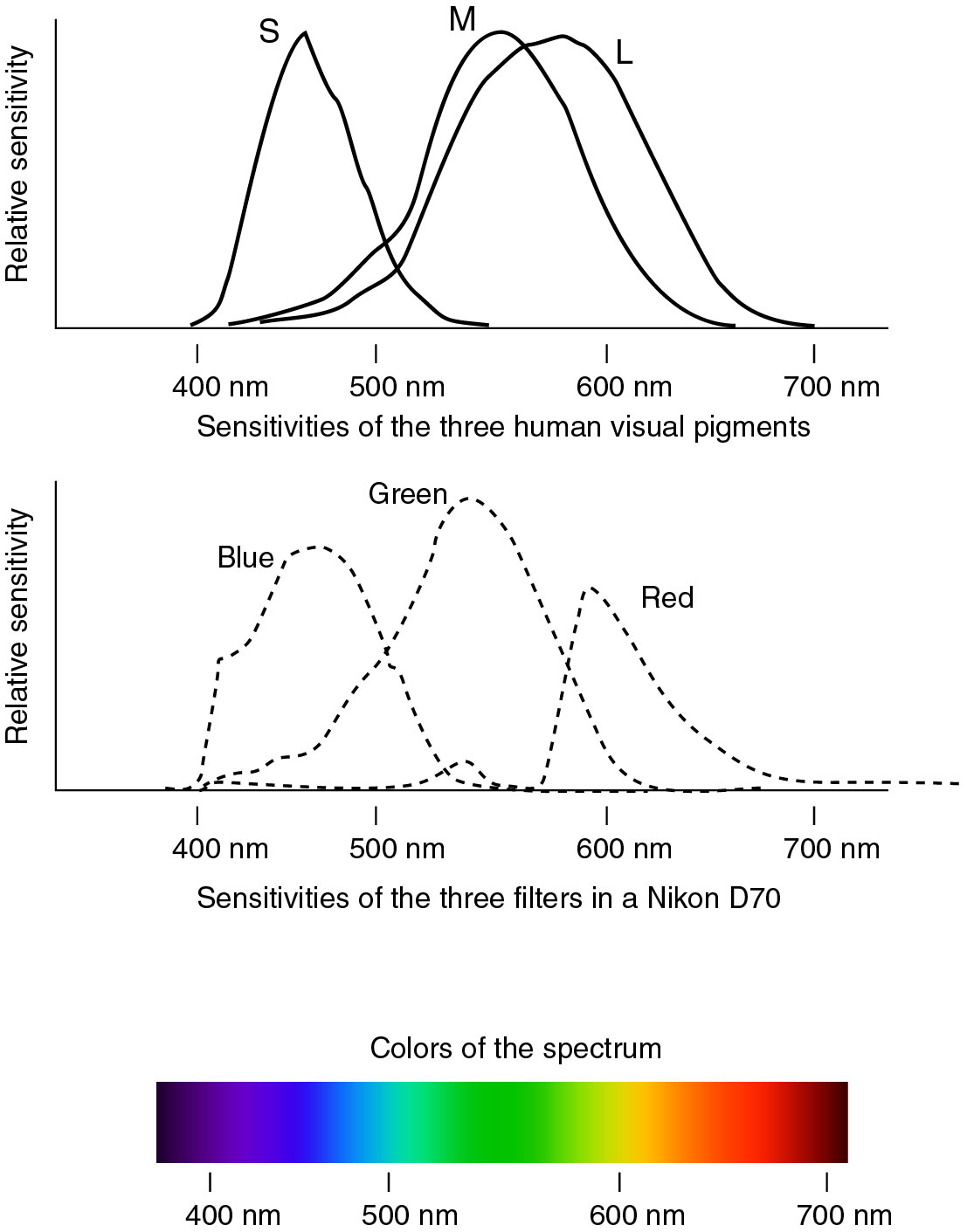

Visible light spans wavelengths from 380 nm (violet) to about 700 nm (red) and contains the colors normally named violet, indigo, blue, green, yellow, orange, and red. The three types of color sensors in the human eye respond most strongly to blue, green, and yellow light, as shown in the figure, and are usually referred to as the short-, medium-, and long-wavelength (S, M, and L) receptors. Although the L receptors peak in the yellow, they are conventionally called the "red" sensors, and that name is used in what follows.

When yellow light strikes the retina, the L and M cone cells are stimulated nearly equally, and the ratio between the two signals determines the color perceived. This information is coded and sent to the brain on a channel called the red-green opponent channel. Red light stimulates the red-sensitive (L) cone cells more strongly than the green-sensitive (M) cone cells, and the brain interprets this ratio as a shade of red. Colors at the other end of the spectrum are similarly coded by the ratio of the responses. This time the blue-sensitive cone cells respond predominantly, but the green-sensitive and red-sensitive cone cells also respond somewhat. A signal derived from the blue-sensitive cone cells is sent to the brain on the yellow-blue opponent channel. In addition, a signal derived from the red-sensitive cone cells is sent, this time over the red-green opponent channel. The brain then interprets the combination of the two signals as violet, blue, or cyan.
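The ratio idea can be made concrete with a deliberately crude toy model. The sketch below assumes each cone's sensitivity is a Gaussian curve; the peak wavelengths (about 420, 534, and 564 nm) are approximate literature values, but the widths are invented for illustration, and the names `CONES`, `response`, and `red_green_ratio` are hypothetical. It shows two things the paragraph relies on: the L/M ratio rises steadily with wavelength, so it can encode hue, and it does not depend on brightness.

```python
import math

# Toy model: Gaussian approximations to cone sensitivities, (peak nm, width nm).
# Peaks are approximate literature values; the widths are assumptions,
# not measured data.
CONES = {
    "S": (420.0, 30.0),
    "M": (534.0, 50.0),
    "L": (564.0, 50.0),
}


def response(cone: str, wavelength_nm: float, intensity: float = 1.0) -> float:
    """Relative response of one cone type to monochromatic light."""
    peak, width = CONES[cone]
    return intensity * math.exp(-((wavelength_nm - peak) ** 2) / (2 * width ** 2))


def red_green_ratio(wavelength_nm: float, intensity: float = 1.0) -> float:
    """L/M ratio: the comparison carried by the red-green opponent channel."""
    return response("L", wavelength_nm, intensity) / response("M", wavelength_nm, intensity)


# Yellow, orange, and red light give progressively larger L/M ratios.
for wl in (580, 600, 650):
    print(wl, round(red_green_ratio(wl), 3))
```

Because intensity multiplies both responses, it cancels in the ratio, so in this model a dim red and a bright red yield the same hue signal.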

To determine the color of light in the violet-blue region of the spectrum, either the red or the green sensor would suffice as a partner to the blue sensor, provided its response curve has a different shape from that of the blue sensor. One might expect the red sensor to be considerably less sensitive to blue light than the green sensor, and hence that the green sensor would be used. It turns out, however, that in the blue region of the spectrum the sensitivities of the red and green sensors are so similar that it matters little which is used. Humans happen to use the red sensor. How do we know? The simplest demonstration is that protanopes, individuals lacking red-sensitive cone cells, are not only insensitive to red light but also cannot distinguish among violet, blue, and cyan.
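The claim that either the red or the green sensor could serve as the blue sensor's partner can be checked in a simple toy model. Assuming Gaussian sensitivities (approximate literature peaks, invented widths; `CONES`, `response`, and `is_strictly_decreasing` are hypothetical names), the sketch tests whether the S/M and S/L ratios each change monotonically from 400 to 480 nm. A strictly monotonic ratio maps back to exactly one wavelength, which is all the second sensor has to provide. The model does not reproduce the real shapes of the cone curves, so it illustrates the logic rather than the physiology.

```python
import math

# Toy Gaussian cone sensitivities: (peak nm, width nm).
# Peaks are approximate literature values; widths are assumptions.
CONES = {"S": (420.0, 30.0), "M": (534.0, 50.0), "L": (564.0, 50.0)}


def response(cone: str, wavelength_nm: float) -> float:
    """Relative response of one cone type to monochromatic light."""
    peak, width = CONES[cone]
    return math.exp(-((wavelength_nm - peak) ** 2) / (2 * width ** 2))


def is_strictly_decreasing(values: list[float]) -> bool:
    """True if every value is smaller than the one before it."""
    return all(a > b for a, b in zip(values, values[1:]))


blue_region = list(range(400, 481, 5))  # 400 to 480 nm in 5 nm steps
s_over_m = [response("S", wl) / response("M", wl) for wl in blue_region]
s_over_l = [response("S", wl) / response("L", wl) for wl in blue_region]

# In this model either partner gives a one-to-one map from ratio to wavelength.
print("S/M strictly decreasing:", is_strictly_decreasing(s_over_m))
print("S/L strictly decreasing:", is_strictly_decreasing(s_over_l))
```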

Digital cameras distinguish colors in much the same way as the human eye, although at the blue end of the spectrum they most likely use the blue and green sensors rather than the blue and red sensors used in humans. The ratios of the signals from the pixels covered with red, green, and blue filters are used to determine the color of the light. As in humans, it is the combination of the spectral sensitivities of the filters and the algorithm used to interpret the signal ratios as colors that determines the camera's actual color response. Camera image files in raw format contain the individual pixel responses and also a profile that tells the raw conversion program which colors the various ratios correspond to. Because the profile is stored in the raw file, different cameras can use color filters with different spectral responses. It is no surprise, then, that the spectral responses of the filters used in digital cameras differ from those of the pigments in the human eye. This is shown in the figure above.
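The role of the raw-file profile can be sketched with linear algebra under a strong simplifying assumption: each camera's raw RGB signal is modelled as a 3x3 matrix of filter responses applied to the scene's tristimulus values, and the profile is simply the inverse of that matrix. Both filter matrices below are invented, and `mat_vec`, `inverse_3x3`, and `chromaticity` are hypothetical helpers. Real profiles are camera-specific and generally more elaborate than a single matrix. The point shown is that two cameras with different filters record different raw signals for the same scene, yet each recovers the same brightness-independent color ratios once its own profile is applied.

```python
# Minimal sketch: raw RGB = (camera filter matrix) x (scene tristimulus values).
# Both filter matrices are invented for illustration only.
Matrix = list[list[float]]
Vector = tuple[float, float, float]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(a * b for a, b in zip(row, v)) for row in m)


def inverse_3x3(m: Matrix) -> Matrix:
    """Invert a 3x3 matrix with the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def chromaticity(t: Vector) -> tuple[float, float]:
    """Ratios x = X/(X+Y+Z), y = Y/(X+Y+Z): color with brightness removed."""
    total = sum(t)
    return t[0] / total, t[1] / total


# Two hypothetical cameras with different filter responses.
FILTERS_A: Matrix = [[0.7, 0.2, 0.1], [0.2, 0.7, 0.1], [0.05, 0.15, 0.8]]
FILTERS_B: Matrix = [[0.5, 0.4, 0.1], [0.3, 0.6, 0.1], [0.1, 0.2, 0.7]]

# In this simplified model, each camera's "profile" undoes its own filters.
PROFILE_A = inverse_3x3(FILTERS_A)
PROFILE_B = inverse_3x3(FILTERS_B)

scene: Vector = (0.3, 0.4, 0.5)  # arbitrary tristimulus values
raw_a = mat_vec(FILTERS_A, scene)
raw_b = mat_vec(FILTERS_B, scene)

print("raw A:", raw_a, "raw B:", raw_b)  # the raw signals differ
print("color A:", chromaticity(mat_vec(PROFILE_A, raw_a)))
print("color B:", chromaticity(mat_vec(PROFILE_B, raw_b)))  # same ratios
```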

Although the human eye and digital cameras both use three color sensors, there is no fundamental reason why a digital camera could not use just two color filters. For every wavelength of the spectrum to be distinguishable by such a camera, one filter must have its sensitivity peak at about 400 nm, with sensitivity decreasing steadily as wavelength increases all the way to about 650 nm. The other filter would need to be maximally sensitive at about 650 nm, with sensitivity decreasing all the way down to 400 nm. Because the ratio of the two signals then changes steadily with wavelength, each ratio corresponds to exactly one wavelength. It would probably also be necessary to exclude wavelengths below 400 nm and above 700 nm with additional filters.
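The two-filter argument can be tested numerically. The sketch below assumes linear ramps for the two filters between 400 and 650 nm, with a small floor so neither signal reaches zero; the ramp shapes, the 0.2 floor, and the names `filter_a`, `filter_b`, `capture`, and `estimate_wavelength` are illustrative assumptions, not a real sensor design. Because one ramp falls while the other rises, the ratio B/A increases strictly with wavelength, so a bisection search can invert it and recover the wavelength regardless of brightness.

```python
# Hypothetical two-filter camera. Filter A peaks at 400 nm and falls linearly
# to 650 nm; filter B does the reverse. Shapes and the 0.2 floor are assumptions.
LOW_NM, HIGH_NM = 400.0, 650.0


def filter_a(wavelength_nm: float) -> float:
    """Sensitivity falling from 1.0 at 400 nm to 0.2 at 650 nm."""
    t = (wavelength_nm - LOW_NM) / (HIGH_NM - LOW_NM)
    return 1.0 - 0.8 * t


def filter_b(wavelength_nm: float) -> float:
    """Sensitivity rising from 0.2 at 400 nm to 1.0 at 650 nm."""
    t = (wavelength_nm - LOW_NM) / (HIGH_NM - LOW_NM)
    return 0.2 + 0.8 * t


def capture(wavelength_nm: float, intensity: float) -> tuple[float, float]:
    """Signals from the two pixel types for monochromatic light."""
    return intensity * filter_a(wavelength_nm), intensity * filter_b(wavelength_nm)


def estimate_wavelength(signal_a: float, signal_b: float, tol: float = 1e-9) -> float:
    """Recover the wavelength from the B/A ratio by bisection.

    This works because B/A rises strictly from 400 to 650 nm, so each ratio
    corresponds to exactly one wavelength; intensity cancels in the ratio.
    """
    target = signal_b / signal_a
    lo, hi = LOW_NM, HIGH_NM
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if filter_b(mid) / filter_a(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Same wavelengths recovered at very different brightness levels.
for wl, brightness in [(410.0, 1.0), (520.0, 3.5), (640.0, 0.2)]:
    a, b = capture(wl, brightness)
    print(wl, round(estimate_wavelength(a, b), 3))
```

The model says nothing about light outside 400 to 650 nm; for such light the estimate would simply clamp to one end of the range, which echoes the point that out-of-band wavelengths would have to be blocked.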